Ethical Concerns in AI for Startups: What Founders Must Know

Discover key ethical concerns in AI for Startups, including privacy, bias, transparency, security, and accountability. Learn how to build responsible AI systems.

Artificial intelligence has become a powerful engine for innovation, and more founders are building AI into their startups to gain a competitive edge. But as AI adoption grows, so do the ethical concerns that come with it. Startups often move fast, experiment quickly, and rely heavily on data-driven automation, which can unintentionally create risks around privacy, fairness, transparency, and accountability. Ethical missteps don’t just harm users; they can damage credibility, reduce investor trust, and invite legal challenges.

For emerging startups that aim to scale responsibly, understanding these ethical challenges is no longer optional. It is a foundational requirement for building AI systems that are safe, transparent, and aligned with user values. This guide explores the essential ethical concerns every startup should consider.

Why Ethics Matter in AI for Startups

Ethics is not just a “nice-to-have” element in artificial intelligence. It is the foundation that protects users, preserves brand reputation, and ensures that technology is used responsibly. For emerging companies, the long-term success of an AI product depends heavily on building systems that people trust and feel safe using. When users can see that a product is transparent and fair, they are far more likely to adopt and recommend it.

Startups typically move quickly, experiment often, and operate with tight budgets. While this agility is an advantage, it also increases the risk of overlooking critical ethical issues such as algorithmic fairness, user privacy, data protection, and model transparency. A single ethical mistake can lead to user mistrust, regulatory penalties, or loss of investor confidence.

This is why startups building with AI must balance rapid innovation with responsible practices. Ethical AI need not slow progress; it can strengthen it by preventing problems before they surface, reducing legal risk, and helping startups build solutions that can scale without harm. By prioritizing ethics from the beginning, startups create AI systems that are safer, more reliable, and better aligned with long-term business sustainability.

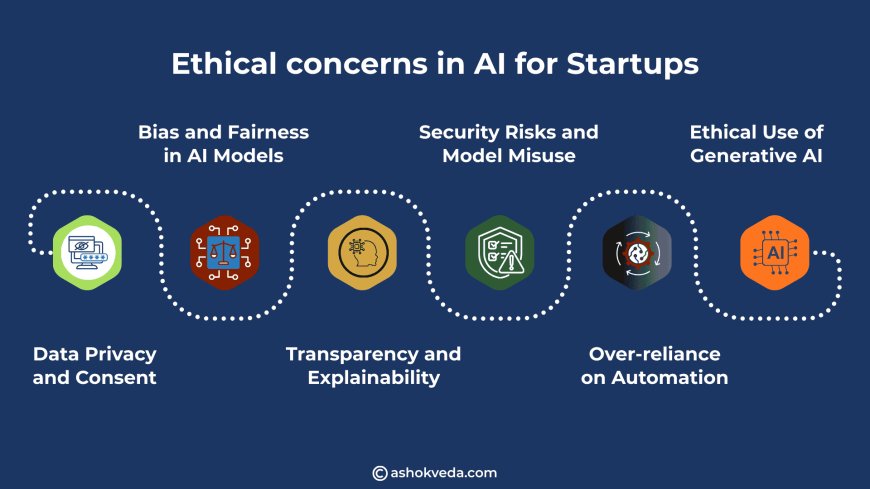

Ethical Concerns in AI for Startups

1. Data Privacy and Consent

Data is the fuel of AI. But collecting and using it without proper safeguards leads to several ethical challenges. Many users do not fully understand how their data is being used, especially when interacting with AI-driven apps.

Key ethical concerns

- Collecting data without clear permission
- Storing sensitive information insecurely
- Sharing or selling data to third parties
- Using personal data to train models without transparency

How Startups Can Improve

- Use clear, simple consent forms
- Limit data collection to what is strictly necessary
- Encrypt user data at rest and in transit
- Delete user data upon request
- Comply with regulations such as the GDPR, the CCPA, and India’s Digital Personal Data Protection Act

Strong data privacy practices help AI-driven startups build trust, especially in sensitive industries like health, finance, and education.
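To make the minimization, consent, and deletion steps listed above more concrete, here is a minimal Python sketch. It assumes a hypothetical in-memory `UserStore`, a hypothetical `ALLOWED_FIELDS` allowlist, and a consent purpose named `"model_training"`; none of these come from a specific framework. Encryption and durable storage are deliberately left out, and real erasure also has to reach backups, logs, and any datasets derived from a user’s records.

```python
from dataclasses import dataclass, field

# Hypothetical allowlist: the only fields this product actually needs.
ALLOWED_FIELDS = {"email", "country", "plan"}


@dataclass
class UserStore:
    """In-memory sketch; a real store would encrypt and persist data."""
    records: dict = field(default_factory=dict)
    consent: dict = field(default_factory=dict)  # (user_id, purpose) -> bool

    def record_consent(self, user_id: str, purpose: str, granted: bool) -> None:
        self.consent[(user_id, purpose)] = granted

    def has_consent(self, user_id: str, purpose: str) -> bool:
        # A user who was never asked is treated as having declined.
        return self.consent.get((user_id, purpose), False)

    def save(self, user_id: str, submitted: dict) -> dict:
        # Data minimization: drop any field not on the allowlist.
        minimized = {k: v for k, v in submitted.items() if k in ALLOWED_FIELDS}
        self.records[user_id] = minimized
        return minimized

    def training_rows(self) -> list:
        # Only users who explicitly opted in to model training are included.
        return [row for uid, row in self.records.items()
                if self.has_consent(uid, "model_training")]

    def erase(self, user_id: str) -> bool:
        # Deletion on request: drop the record and every consent entry.
        existed = self.records.pop(user_id, None) is not None
        for key in [k for k in self.consent if k[0] == user_id]:
            del self.consent[key]
        return existed
```

The key design choice is that consent defaults to `False`: a user who was never asked is treated as having declined, so their data cannot reach the training set by accident.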

2. Bias and Fairness in AI Models

Bias is one of the biggest risks for startups using AI, and it often comes from the data. If the training data reflects historical biases, the model can reproduce or even amplify them.

Common Examples

- Loan approval models favoring certain income groups
- Hiring tools rejecting qualified candidates because of gender or ethnicity
- Facial recognition working better for some skin tones than others

Why This Matters for Startups

Customers expect fairness. Investors and regulators increasingly check how a company handles bias. Biased models can lead to legal consequences and reputational damage, which can be devastating for early-stage companies relying on credibility.

How to Reduce Bias

- Use diverse and representative datasets
- Audit models regularly
- Test outputs on multiple demographic groups
- Document decisions behind model training

Fair, unbiased AI strengthens a startup’s products and helps ensure they work for everyone.
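One way to test outputs on multiple demographic groups is to compare how often a model approves each group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The 0.8 default threshold borrows the “four-fifths rule” used as a screening heuristic in US employment guidelines; treat it as an assumption rather than a legal test, and note that small groups produce noisy rates.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, approved) pairs; approved is a bool."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in outcomes:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved, so all rates are equal
    return min(rates.values()) / highest


def audit(outcomes, threshold=0.8):
    # threshold=0.8 mirrors the "four-fifths" heuristic; an assumption here.
    rates = selection_rates(outcomes)
    ratio = disparate_impact(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}
```

For example, if group A is approved 8 times out of 10 and group B 5 times out of 10, the rates are 0.8 and 0.5, the ratio is 0.625, and the audit flags the model for closer review.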

3. Transparency and Explainability

Users want to know how AI systems make decisions. Many models, especially deep learning systems, act like black boxes. When a startup’s AI cannot explain its decisions, users lose trust.

Key risks

- Users feel manipulated
- Customers cannot challenge incorrect decisions
- Companies struggle to comply with regulations requiring explainability

How Startups Can Improve Transparency

- Provide simple explanations of model logic
- Offer clear reasoning for decisions affecting users
- Maintain documentation for model building
- Use interpretable models for high-stakes decisions

Explainability helps keep a startup’s AI accountable and user-friendly.
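For high-stakes decisions, an interpretable model can explain itself directly. The sketch below assumes a simple linear scoring model whose feature names, weights, bias, and threshold (`WEIGHTS`, `BIAS`, `THRESHOLD`) are invented for illustration. Because each feature’s contribution is just weight times value, the largest contributions can be shown to the user as plain-language reasons for the decision.

```python
# Hypothetical features, weights, and threshold, invented for illustration.
WEIGHTS = {"income_k": 0.04, "missed_payments": -0.9, "years_employed": 0.3}
BIAS = -1.5
THRESHOLD = 0.0


def contributions(applicant):
    """Each feature's share of the score is simply weight * value."""
    return {f: w * applicant[f] for f, w in WEIGHTS.items()}


def score(applicant):
    return BIAS + sum(contributions(applicant).values())


def explain_decision(applicant, top_n=2):
    decision = "approved" if score(applicant) >= THRESHOLD else "declined"
    # Largest absolute contributions first: these are the main reasons.
    reasons = sorted(contributions(applicant).items(),
                     key=lambda kv: abs(kv[1]), reverse=True)
    return decision, reasons[:top_n]
```

With `{"income_k": 50, "missed_payments": 3, "years_employed": 2}`, the score is about -1.6, so the applicant is declined, and the top reasons are `missed_payments` (about -2.7) followed by `income_k` (about +2.0). For black-box models, post-hoc attribution tools such as SHAP or LIME approximate the same kind of breakdown, but their explanations are estimates rather than the model’s exact logic.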

4. Security Risks and Model Misuse

AI systems are attractive targets for cyberattacks. Hackers can manipulate training data, steal models, or exploit weaknesses. Many startups skip security due to time or budget constraints, which creates major ethical concerns.

Security challenges

- Model theft (stolen AI intellectual property)
- Data poisoning attacks (tampered training data)
- Adversarial inputs (slightly altered data that tricks the model)
- Weak access controls and exposed APIs

Security Best Practices

- Use strong authentication and access control
- Monitor data pipelines for tampering
- Add adversarial testing
- Regularly update and patch systems

For AI-driven startups, strong security is essential to protect both the company and its users.
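Adversarial testing can start small. For a linear classifier with score w·x + b, the worst-case change within a budget of ε per feature (an L∞ budget) is known exactly: shift each feature by ε against the sign of its weight, toward the opposite class. The sketch below applies that perturbation to a batch of samples and reports which predictions flip. The weights, samples, and ε are hypothetical, and ε has to be chosen in meaningful units for each feature; for non-linear models, gradient-based attacks such as FGSM extend the same idea.

```python
def sign(v):
    return (v > 0) - (v < 0)


def raw_score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if raw_score(w, b, x) >= 0 else 0


def worst_case_perturbation(w, b, x, eps):
    """Move each feature by eps against the currently predicted class.

    For a linear model this is the exact worst case among all inputs
    within eps of x in every coordinate (an L-infinity budget).
    """
    direction = -1 if raw_score(w, b, x) >= 0 else 1
    return [xi + direction * eps * sign(wi) for wi, xi in zip(w, x)]


def robustness_report(w, b, samples, eps):
    """Return the fraction of samples whose prediction flips, and those samples."""
    flipped = [x for x in samples
               if predict(w, b, worst_case_perturbation(w, b, x, eps)) != predict(w, b, x)]
    return len(flipped) / len(samples), flipped
```

With `w = [1.0, -2.0]`, `b = 0.0`, and `eps = 0.1`, no score can move by more than 0.3 (ε times the sum of absolute weights). Of the samples `[1.0, 0.3]`, `[0.5, 0.2]`, and `[-1.0, 0.5]` (scores 0.4, 0.1, and -2.0), only `[0.5, 0.2]` sits close enough to the boundary to flip.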

5. Over-reliance on Automation

Automation can streamline processes, but over-dependence creates ethical challenges. Users may assume AI systems are always accurate, even when they make mistakes.

Examples of harmful over-reliance

- Automated hiring tools rejecting qualified candidates
- Chatbots giving incorrect medical or legal advice
- Automated moderation failing to detect harmful content

Solutions

- Keep humans in the loop for high-impact tasks
- Set clear boundaries for what AI can and cannot do
- Offer override options or appeal processes

Balancing automation with human judgment is essential for responsible AI in startups.
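The human-in-the-loop and appeal solutions above can be encoded as an explicit routing rule. The sketch below is one hypothetical policy: every high-impact decision, and any decision below a confidence threshold, goes to a human reviewer, and users can push an automated decision into the review queue by appealing. The 0.9 threshold, the `Decision` fields, and the in-memory `review_queue` are assumptions; in practice a confidence threshold is only meaningful if the model’s scores are calibrated.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str       # e.g. "approve" or "reject"
    confidence: float  # model's probability for `outcome`, assumed calibrated
    high_impact: bool  # affects jobs, health, money, legal status, etc.


# Hypothetical in-memory queue of decision IDs awaiting a human reviewer.
review_queue = []


def route(decision, min_confidence=0.9):
    """Automate only low-impact, high-confidence decisions."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


def handle(decision_id, decision, min_confidence=0.9):
    path = route(decision, min_confidence)
    if path == "human_review":
        review_queue.append(decision_id)
    return path


def appeal(decision_id):
    # Users can contest any automated outcome; appeals always reach a person.
    if decision_id not in review_queue:
        review_queue.append(decision_id)
```

Routing every high-impact case to a person, regardless of confidence, is the conservative choice here; a team could relax it later once review data shows where the model is reliable.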

6. Ethical Use of Generative AI

Generative AI, which produces text, images, video, and code, comes with its own set of risks. As more founders adopt it, they must use generative tools responsibly.

Ethical concerns

- Misinformation or deepfakes
- Copyright violations
- Output that appears original but is based on someone’s intellectual property
- Harmful, inappropriate, or misleading content creation

How Startups Can Handle Generative AI Safely

- Use moderation filters
- Provide clear disclaimers for AI-generated content
- Train teams on copyright-safe practices
- Evaluate all outputs before public release

Responsible use protects the reputation of both the startup and the wider ecosystem of startups building with AI.
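The moderation, disclaimer, and review steps above can be chained into a single release gate so that no generated content is published without passing all three. In this sketch the keyword blocklist is only a placeholder (real products typically call a dedicated moderation model or service), and `BLOCKED_TERMS`, `DISCLAIMER`, `Draft`, and `prepare_for_release` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder blocklist; a real product would call a dedicated
# moderation model or service rather than match keywords.
BLOCKED_TERMS = {"example-slur", "example-threat"}

# Hypothetical disclaimer text appended to AI-generated content.
DISCLAIMER = "This content was generated with AI and reviewed by our team."


@dataclass
class Draft:
    text: str
    ai_generated: bool = True
    reviewed_by: Optional[str] = None  # name of the human reviewer, if any


def moderation_flags(text):
    """Return any blocked terms found in the text (case-insensitive)."""
    words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
    return sorted(words & BLOCKED_TERMS)


def prepare_for_release(draft):
    """Filter, require human review, then label AI output before publishing."""
    flags = moderation_flags(draft.text)
    if flags:
        raise ValueError(f"blocked by moderation filter: {flags}")
    if draft.reviewed_by is None:
        raise ValueError("AI output must be reviewed by a person before release")
    if draft.ai_generated:
        return f"{draft.text}\n\n{DISCLAIMER}"
    return draft.text
```

Raising an error, rather than silently dropping content, makes a blocked or unreviewed draft visible to the team instead of letting it slip through.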

Conclusion

Ethics can be one of the strongest competitive advantages in the fast-growing world of AI for startups. While speed and innovation are crucial, responsible development builds long-term success. Startups must focus on privacy, fairness, transparency, security, and accountability to create solutions that truly help people. By integrating ethical principles early, young companies can build AI that users trust, investors value, and the industry respects. The most successful AI startups of the future are likely to be those that combine breakthrough technology with responsible practices.