Understanding Security Issues in AI Consulting: Risks & Solutions

Explore the security issues in AI consulting and learn about the risks, challenges, and solutions to mitigate threats, ensuring secure AI implementation.

Artificial Intelligence (AI) continues to be a driving force behind digital transformation across industries. AI has already revolutionized business processes, from automating tasks to offering deeper data insights, and now it's deeply embedded in the consulting sector. According to a report, AI-driven solutions in consulting are expected to contribute over $100 billion to the global market by 2025. However, as AI becomes more prevalent, it also introduces a range of security issues that can significantly impact organizations, clients, and stakeholders.

While AI offers significant benefits, it also presents a new set of vulnerabilities. From data breaches to adversarial attacks on AI models, the security landscape is evolving. As AI-powered systems take on increasingly critical roles, securing them becomes paramount. This blog explores the security issues in AI consulting, discussing their implications and strategies to mitigate them.

Key Security Issues in AI Consulting

1. Data Privacy and Protection

AI systems, particularly those used in consulting, often rely on vast amounts of sensitive data to operate effectively. This data can include personally identifiable information (PII), financial records, healthcare data, and proprietary business insights. Handling such data introduces privacy concerns that need to be addressed with robust security measures.

- Data Exposure: AI systems are at risk of unauthorized access, whether due to poor data protection practices, data leakage, or hacking attempts.
- Regulatory Compliance: AI systems in consulting must comply with data protection laws, such as GDPR or CCPA, which impose strict requirements on how data is collected, stored, and processed. Non-compliance can lead to significant fines and reputational damage.
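One practical way to reduce data exposure is to strip obvious identifiers from text before it reaches an AI system or its logs. The sketch below is illustrative only: the two patterns (e-mail addresses and US-style Social Security numbers) and the function name `redact_pii` are assumptions chosen for the example, and real PII detection needs far broader coverage than two regular expressions.

```python
import re

# Illustrative patterns only (assumptions for this sketch); production PII
# detection needs many more categories and locale-specific formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace e-mail addresses and US-style SSNs with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = US_SSN_RE.sub("[SSN]", text)
    return text


if __name__ == "__main__":
    note = "Contact jane.doe@example.com, SSN 123-45-6789, about the Q3 audit."
    print(redact_pii(note))
```

Redaction of this kind does not by itself satisfy GDPR or CCPA; it is one small control among the measures discussed later in this post.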

2. Vulnerabilities in AI Models

AI models, particularly machine learning algorithms, are vulnerable to various types of security threats. These vulnerabilities can compromise the integrity of AI systems, leading to potential security risks.

- Adversarial Attacks: Malicious actors can manipulate AI models by introducing adversarial inputs (data specifically designed to mislead the AI into making incorrect predictions or decisions). These attacks can significantly disrupt the functionality of AI systems in critical applications.
- Model Inversion: Through model inversion techniques, attackers can extract sensitive information from AI models by analyzing the model's outputs. For example, this could allow attackers to reconstruct confidential datasets.
- Model Poisoning: If attackers can inject misleading or biased data into the training process, they can "poison" the AI model, causing it to behave incorrectly or produce biased outcomes.
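To make the idea of an adversarial input concrete, here is a minimal sketch on a toy linear classifier. The weights, bias, input, and `epsilon` are made-up values for illustration. The perturbation follows the spirit of gradient-sign attacks: for a linear score, the gradient with respect to the input is simply the weight vector, so nudging each feature against the sign of its weight lowers the score as fast as possible for a given per-feature budget.

```python
def predict(weights, bias, x):
    """Return 1 if the linear score is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, x, epsilon):
    """Move each feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Toy model and input (hypothetical values).
weights = [2.0, -1.0, 0.5]
bias = -0.5
x = [0.6, 0.2, 0.4]

x_adv = perturb(weights, x, epsilon=0.3)
print(predict(weights, bias, x), predict(weights, bias, x_adv))
```

With these values the original input is classified as 1 and the perturbed input as 0, even though no feature moved by more than 0.3. Real attacks on deep models work on the same principle but need the model's gradients or repeated queries.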

3. Lack of Explainability and Transparency

A significant challenge in AI consulting is the "black box" nature of many AI systems. While AI models, especially deep learning models, have achieved impressive performance, their decision-making processes often remain opaque. This lack of transparency creates multiple risks:

- Accountability Issues: In the event of an AI system failing or making a harmful decision, it becomes difficult to determine the cause of the issue or assign accountability, making it harder to address or prevent future issues.
- Trust Deficit: Organizations and clients are often hesitant to fully adopt AI solutions without a clear understanding of how the system operates. Lack of explainability can lead to a loss of trust in the technology, potentially delaying AI implementation or undermining the potential benefits.

4. Insider Threats

While much focus is placed on external cyberattacks, insider threats—whether intentional or unintentional—remain one of the biggest security concerns in AI consulting.

- Misuse of Data: Employees with access to sensitive data may use or share it maliciously for personal gain, leading to data breaches or intellectual property theft.
- Unintentional Security Lapses: Even well-intentioned employees may inadvertently expose the organization to security risks due to a lack of understanding about proper data handling and security protocols.

5. Third-Party and Supply Chain Risks

Many AI consulting projects rely on third-party vendors for data, AI tools, cloud infrastructure, and even outsourced development. While these partnerships are critical to the AI ecosystem, they can also introduce additional risks:

- Third-Party Vulnerabilities: If a third-party vendor's system is compromised, it could impact the security of the AI solution itself. For example, a cloud provider with inadequate security could lead to data breaches in AI-powered applications.
- Supply Chain Attacks: Attackers can target weaker links in the supply chain to inject malicious code or data into the AI system, potentially compromising the entire consulting project.

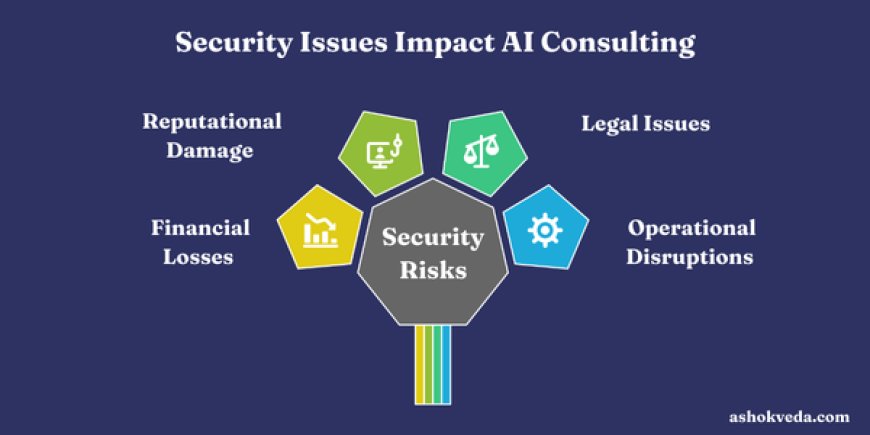

Implications of Security Issues in AI Consulting

The security risks associated with AI in consulting are not just theoretical—they can have serious, real-world consequences. Here's how these risks can impact businesses and stakeholders:

- Financial Losses: Data breaches, system hacks, or fraudulent activities due to poor AI security can result in direct financial losses, including regulatory fines, class action lawsuits, or the cost of remediation efforts.
- Reputational Damage: AI failures, such as incorrect predictions or biases, can damage the reputation of both consulting firms and their clients, eroding customer trust and credibility.
- Legal and Regulatory Consequences: Organizations may face legal ramifications if their AI systems do not comply with local or international data protection laws.
- Operational Disruptions: Security breaches can disrupt business operations, leading to downtime, lost productivity, and reduced operational efficiency.

Mitigating Security Risks in AI Consulting

Given the critical nature of security in AI consulting, organizations must take proactive steps to mitigate risks. Below are some key strategies for ensuring AI system security:

1. Robust Data Protection Measures

- Encryption: Implement strong encryption protocols to secure sensitive data both at rest and in transit.
- Access Controls: Use role-based access controls (RBAC) to limit who can access sensitive data and AI systems.
- Data Anonymization: Where possible, anonymize data to minimize the risk of exposing personal information in the event of a breach.
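Two of these measures can be sketched with Python's standard library alone. The role names, permission strings, and demo key below are hypothetical. Note also that the keyed hash shown is pseudonymization rather than full anonymization: whoever holds the key can recompute the mapping, so the key has to be protected separately from the data.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read:reports"},
    "data_engineer": {"read:reports", "read:raw_data"},
    "admin": {"read:reports", "read:raw_data", "manage:models"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles receive no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    demo_key = b"demo-key-do-not-use-in-production"  # assumption: load from a secret store in practice
    print(is_allowed("analyst", "read:raw_data"))
    print(pseudonymize("client-42@example.com", demo_key)[:16])
```

The deny-by-default lookup is a deliberate choice: a typo in a role name results in no access rather than accidental access. Encryption at rest and in transit is best left to vetted libraries and platform features rather than hand-written code, so it is not sketched here.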

2. Enhancing AI Model Security

- Adversarial Defense: Train AI models to resist adversarial inputs, for example by including deliberately perturbed examples in the training data, making them more resilient to attack.
- Model Auditing: Conduct regular audits of AI models to detect vulnerabilities and biases.
- Secure Training Practices: Ensure that training datasets are free from bias and tampering, and regularly refresh training data to keep models accurate and secure.
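As one example of what a model audit might measure, the sketch below compares how often a model produces a positive prediction for different groups. The gap between the highest and lowest rate is sometimes called the demographic parity difference. The predictions and group labels are made-up data, and a single metric like this is a starting point for an audit rather than a verdict on fairness.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(predictions, groups):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: binary predictions and a group label per record.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(predictions, groups))
print(parity_gap(predictions, groups))
```

Here group "a" receives a positive prediction 75% of the time and group "b" 25% of the time, a gap of 0.5 that would warrant a closer look at the training data and features.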

3. Promoting Transparency and Explainability

- Explainable AI (XAI): Develop AI systems that are transparent and capable of explaining their decisions in a human-understandable way.
- Clear Documentation: Maintain thorough documentation of AI models, including their training data, algorithms, and decision-making processes.
- Stakeholder Involvement: Involve stakeholders throughout the AI implementation process to ensure transparency and foster trust in the system.
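For simple models, an explanation can be as direct as showing how much each input contributed to the result. The sketch below does this for a linear scoring model, where each contribution is just weight times value. The feature names, weights, and bias are hypothetical, and this approach does not carry over to deep learning models, which need dedicated explanation techniques.

```python
def explain_linear(weights, bias, features):
    """Return (score, ranked contributions) for a linear scoring model.

    Each contribution is weight * value, so bias + sum of contributions
    equals the score. Contributions are ranked by absolute size.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical risk-scoring model and one client record.
weights = {"late_payments": 1.5, "account_age_years": -0.25, "open_tickets": 0.5}
features = {"late_payments": 2, "account_age_years": 4, "open_tickets": 1}

score, ranked = explain_linear(weights, bias=-1.0, features=features)
print(score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

A breakdown like this gives a client something concrete to question ("why do late payments weigh so heavily?"), which speaks to both the accountability and the trust concerns raised earlier.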

4. Preventing Insider Threats

- Employee Training: Regularly train employees on best practices for data security and the ethical use of AI systems.
- Behavioral Monitoring: Use behavioral analytics to monitor employee activity and detect any suspicious behavior or unauthorized access.
- Data Use Policies: Establish clear guidelines for data access and usage to minimize the chances of misuse.
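Behavioral monitoring can start very simply: compare a user's activity today with that same user's own history. The sketch below flags a daily record-access count that sits far above the baseline, measured in standard deviations. The threshold of 3.0 and the sample counts are assumptions for illustration; commercial behavioral analytics tools use far richer signals.

```python
from statistics import mean, stdev


def is_unusual(history, today, threshold=3.0):
    """Flag today's count if it is more than `threshold` standard deviations
    above the user's own historical mean."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return today > baseline  # perfectly flat history: any increase stands out
    return (today - baseline) / spread > threshold


# Hypothetical daily counts of sensitive records opened by one employee.
history = [12, 15, 11, 14, 13, 12, 16]

print(is_unusual(history, today=14))
print(is_unusual(history, today=90))
```

A flag from a check like this is a prompt for human review, not proof of wrongdoing; a spike can just as easily reflect a legitimate deadline.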

5. Managing Third-Party Risks

- Vendor Security Assessments: Evaluate the security practices of third-party vendors before working with them and periodically reassess them.
- Third-Party Contracts: Ensure that contracts with third-party vendors include security clauses and responsibilities for data protection.
- Monitoring Supply Chains: Continuously monitor third-party systems and services to ensure they maintain high security standards.
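One concrete supply chain control is to pin a checksum for every model file or dataset received from a third party and refuse to load anything that does not match. The sketch below uses SHA-256 from Python's standard library. The function names are illustrative, and the sketch assumes the pinned digest was obtained through a trusted channel, separately from the file itself.

```python
import hashlib
import hmac
import os
import tempfile


def sha256_of_file(path, chunk_size=65536):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_hex):
    """Return True only if the file's digest matches the pinned value."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())


if __name__ == "__main__":
    # Stand-in for a downloaded model file (hypothetical content).
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"model-weights-v1")
    pinned = hashlib.sha256(b"model-weights-v1").hexdigest()
    print(verify_artifact(tmp.name, pinned))

    # Simulate tampering after the digest was pinned.
    with open(tmp.name, "ab") as handle:
        handle.write(b"-backdoor")
    print(verify_artifact(tmp.name, pinned))
    os.remove(tmp.name)
```

A checksum only proves the file is the one the vendor published; it says nothing about whether that file was safe to begin with, which is why the vendor assessments above still matter.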

The Role of AI in Strengthening Security

Ironically, AI itself can be a powerful tool in enhancing security. Here are a few ways AI can be used to mitigate security risks:

- Threat Detection: AI-powered security systems can analyze large volumes of data to identify and respond to potential threats in real-time.
- Predictive Analytics: AI can predict potential vulnerabilities or breaches based on historical data, allowing businesses to take proactive measures.
- Automated Incident Response: AI can automate security responses, reducing the time it takes to mitigate attacks and minimizing damage.

While AI consulting offers immense potential to drive business innovation, it also introduces significant security risks that must be carefully managed. From data privacy concerns to model vulnerabilities, the security issues in AI consulting require a comprehensive approach that includes robust data protection, transparent AI models, and careful management of insider and third-party risks. As AI continues to evolve, so too will the strategies needed to secure these systems. By taking proactive steps to address security issues, businesses can ensure that AI not only enhances their capabilities but does so in a secure and trustworthy manner. Ensuring the security of AI systems is not just a technical requirement—it is a critical step in building sustainable, ethical, and successful AI-powered businesses.