Navigating the Key Challenges in AI for Startups

Discover the top challenges in AI for startups in 2025, including funding, talent, data, compliance, and strategies to overcome them for success.

AI has transformed from a niche technology into a core driver of innovation across industries. Global investment in AI startups has surged, with billions flowing into companies developing machine learning models, automation tools, and industry-specific solutions. Yet despite this boom, many startups face a steep uphill battle. High compute costs, scarce talent, messy data, and complex regulatory landscapes make building a sustainable AI product far harder than coding a clever algorithm. Industry analyses show rapid adoption and heavy investment in AI, but they also describe an ecosystem where winning takes engineering muscle, deep domain data, governance practices, and pragmatic go-to-market plans.

Success requires navigating technical, operational, and business obstacles simultaneously. Founders must balance innovation with compliance, infrastructure costs, and measurable business outcomes. In this post, we’ll explore the main challenges facing AI startups, drawing on 2025 industry data and insights, and provide actionable strategies to turn these constraints into competitive advantages that drive growth and long-term success.

Challenges in AI for Startups

1) Money talks: funding is abundant, but concentrated and conditional

Why it’s a challenge. Venture capital and private investment in AI have ballooned, but the capital is increasingly concentrated in later-stage “foundation model” plays and a handful of headline-grabbers. That means early-stage teams building vertical applications or tooling often face tighter scrutiny, a higher bar for traction, and pressure to show revenue or defensible moats quickly. Cash is available, but expectations are higher: investors want measurable adoption, sound unit economics, or IP that justifies follow-on rounds.

What startups feel. Founders report term sheets that demand faster scaling, clearer unit economics, and less speculative R&D. Meanwhile, rising valuations at the top pull talent and investor attention away from smaller companies.

Tactics. Focus on capital-efficient milestones: revenue, paid pilots, churn metrics, and a clear path to gross margin. Use non-dilutive options (grants, strategic partnerships, customer-funded pilots) to buy runway while you demonstrate repeatable demand. Consider staging product-roadmap investments so that heavy compute spending is postponed until product-market fit is proven.

2) Talent scarcity and hiring math

Why it’s a challenge. The demand for experienced ML/AI engineers, data scientists, MLOps engineers, and AI product managers far outstrips supply. Startups compete with FAANG-scale companies, deep-pocketed AI labs, and unicorns for a shallow pool of senior talent. This labor shortage drives salaries up and extends time-to-hire, both of which hurt when you need engineers to productionize models fast. Recent analyses show the AI talent gap remains acute and is driving wage inflation and hiring bottlenecks.

What startups feel. The “perfect” candidate rarely exists; hiring cycles drag on, and hiring juniors without the right mentoring or org DNA leads to slower progress and fragile systems.

Tactics.

- Hire for transferable engineering excellence, not just model papers. Strong software-engineering skills plus curiosity often beat narrow academic credentials.
- Use contractor networks, part-time specialists, and vetted consultancy partners to bridge gaps without long-term payroll commitments.
- Invest in tooling and automation that augments fewer engineers (MLOps platforms, CI/CD for models, reproducible training pipelines).
- Build apprenticeship programs: hire more juniors and invest short-term to gain longer-term retention and cultural fit.

3) Compute and infrastructure costs are violently non-linear

Why it’s a challenge. Training and serving modern models can be extremely expensive. Top-tier, frontier models have training costs that can scale into the tens or hundreds of millions of dollars for the largest architectures; serving at scale also requires specialized hardware and engineering. Even fine-tuning or running LLMs for production features can create runaway cloud bills if not carefully architected. Multiple industry reports highlight rapidly growing compute costs as a major barrier for smaller teams.

What startups feel. Surprise billing, poor cost predictability, and time lost tuning infra decisions. Founders sometimes lose product focus when “keeping the lights on” consumes engineering cycles.

Tactics.

- Architect for cost-awareness from day one: choose batching, quantization, and model distillation techniques to reduce inference cost.
- Use hybrid approaches: on-device, edge, or server-side lightweight models for latency-sensitive features; route heavy workloads to offline or batch processing.
- Explore spot instances, committed-use discounts, and specialized AI cloud providers with transparent pricing.
- Build A/B tests that measure usage impact on revenue before committing to large-scale serving.
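Cost-awareness usually starts with back-of-the-envelope arithmetic. The sketch below compares the monthly serving cost of two plans. The prices, token counts, and traffic figures are invented for illustration and are not taken from any provider’s price list; `ServingPlan` and `monthly_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServingPlan:
    """Hypothetical serving configuration; all prices are illustrative."""
    name: str
    usd_per_1k_tokens: float   # assumed blended price per 1,000 tokens
    tokens_per_request: int    # assumed average prompt + completion size


def monthly_cost(plan: ServingPlan, requests_per_day: int, days: int = 30) -> float:
    """Estimate monthly inference spend in USD for one plan."""
    tokens = requests_per_day * days * plan.tokens_per_request
    return tokens / 1000 * plan.usd_per_1k_tokens


# Illustrative numbers only: a large hosted model vs. a distilled one.
large = ServingPlan("large-model", usd_per_1k_tokens=0.01, tokens_per_request=1500)
small = ServingPlan("distilled-model", usd_per_1k_tokens=0.001, tokens_per_request=1500)

for plan in (large, small):
    cost = monthly_cost(plan, requests_per_day=50_000)
    print(f"{plan.name}: ${cost:,.2f} per month")
```

Running the same calculation against your own traffic forecast and real quotes makes it easier to decide whether distillation or quantization work is worth the engineering time before the bill arrives.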

4) Data: the fuel is messy, private, and expensive to get right

Why it’s a challenge. High-quality labeled data is the lifeblood of reliable models. But data is often siloed, inconsistent, or legally restricted. Startups face three core data problems: access, labeling quality, and governance (consent, privacy, lineage). Without curated, high-quality datasets, models deliver brittle or biased outputs that ruin user trust and product credibility.

What startups feel. Releasing early products with poor data can backfire: mispredictions, privacy incidents, or biased outputs erode trust and make customer adoption harder.

Tactics.

- Prioritize data partnerships and customer-funded annotation projects (customers pay for the model improvements they need).
- Invest in small-but-high-quality labeled datasets for the core use-case before scaling to massive, noisy corpora.
- Implement robust data governance: consent capture, versioning, lineage, and audit trails. This matters for compliance (see section 7 on governance and compliance).
- Use synthetic data carefully: it can accelerate development but requires validation to avoid unrealistic artifacts or distribution mismatch.
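Versioning and lineage can start very small. The following is a minimal sketch, assuming a dataset fits in memory as a list of dictionaries; the `DatasetVersion` fields and the sample rows are hypothetical, and a real system would more likely rely on a dedicated data-versioning tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class DatasetVersion:
    """Hypothetical lineage record; field names are illustrative."""
    name: str
    content_sha256: str   # fingerprint of the exact rows used
    source: str           # where the data came from
    consent_basis: str    # e.g. "customer contract" or "user opt-in"
    parent_sha256: str    # version this one was derived from ("" if none)


def fingerprint(rows: List[dict]) -> str:
    """Hash rows in canonical JSON so the same rows in the same order give the same hash."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Toy rows, invented for illustration.
raw = [{"id": 1, "text": "refund request", "label": "billing"}]
cleaned = [{"id": 1, "text": "refund request", "label": "billing", "reviewed": True}]

v1 = DatasetVersion("tickets", fingerprint(raw), "pilot customer export",
                    "customer contract", "")
v2 = DatasetVersion("tickets", fingerprint(cleaned), "internal relabeling",
                    "customer contract", v1.content_sha256)

# An append-only list of these records acts as a simple audit trail.
audit_trail = [asdict(v1), asdict(v2)]
print(json.dumps(audit_trail, indent=2))
```

Because each version points at its parent’s hash, you can trace any trained model back through every transformation to the original source and its consent basis.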

5) Product-market fit for AI is harder than a demo

Why it’s a challenge. A flashy demo attracts attention, but a product that customers will actually pay for needs clear ROI, integration patterns, and reliability. AI features that save minutes per task or reduce error rates often require a deep understanding of customer workflows and change management.

What startups feel. Many companies pivot between promising demo use-cases but struggle to find repeatable sales motions or measurable business outcomes.

Tactics.

- Start with narrow vertical problems with measurable KPIs (reduction in time, cost savings, or improved conversion rates).
- Build strong UX around model outputs: show confidence scores, provenance, and easy ways for users to correct or override AI outputs.
- Offer pilot projects with KPIs and clear evaluation criteria rather than vague “proofs of concept.”
- Price based on value delivered (outcome-based pricing), not just API calls or compute.

6) MLOps, deployment, and lifecycle maintenance

Why it’s a challenge. Models degrade, data shifts, and dependencies change. Deploying a model to production is only the beginning — continuous monitoring, retraining, and governance require robust MLOps practices. Many startups underestimate the engineering lift to maintain model quality in production.

What startups feel. “It worked in my notebook” syndrome: the model performs offline but breaks in production due to input drift, unhandled edge cases, or scaling issues.

Tactics.

- Implement automated monitoring for data drift, performance regression, and latency alerts.
- Adopt reproducible pipelines (infrastructure-as-code, data versioning, model registries).
- Create retraining schedules and canary deployment patterns to limit the blast radius of failing updates.
- Invest in unit and integration tests for model behavior, not just infrastructure.
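One common way to monitor for data drift is the Population Stability Index (PSI), which compares the distribution of a feature in production against a reference sample. The sketch below is a plain-Python illustration, not a production monitor; the bin count, the `1e-6` floor, and the `0.25` alert threshold (a commonly cited rule of thumb) are assumptions you should tune.

```python
import math
from typing import List, Sequence

DRIFT_THRESHOLD = 0.25  # assumed alert level; a widely used rule of thumb


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Toy samples, invented for illustration.
reference = [i / 100 for i in range(100)]      # spread evenly over 0.00-0.99
shifted = [0.5 + i / 200 for i in range(100)]  # concentrated in the upper half

for name, sample in (("same", reference), ("shifted", shifted)):
    score = psi(reference, sample)
    status = "ALERT" if score > DRIFT_THRESHOLD else "ok"
    print(f"{name}: PSI={score:.3f} {status}")
```

A check like this can run on a schedule per input feature, with alerts feeding the same on-call channel as latency and error-rate alarms.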

7) Governance, explainability, and compliance

Why it’s a challenge. Regulators are moving fast. The EU AI Act and similar frameworks place obligations on providers and deployers of “high-risk” AI systems. Compliance requires documentation, risk assessments, explainability, and safety cases, all of which add operational overhead and product constraints. Startups must prepare evidence for audits and demonstrate responsible design practices.

What startups feel. A compliance burden that can slow product launches and increase legal/engineering costs.

Tactics.

- Build compliance into product development (privacy-by-design, documentation, mitigation strategies).
- Keep clear model provenance: data sources, training specs, hyperparameters, and validation results.
- Add human-in-the-loop checks for higher-risk decisions and maintain logs for downstream audits.
- Consult compliance and legal experts early; it’s cheaper to design for compliance than to retrofit.
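A human-in-the-loop check with an audit log can be sketched in a few lines. Everything here is an assumption for illustration: the `0.90` confidence threshold, the `Decision` fields, and the in-memory `audit_log` list, which stands in for durable, append-only storage.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

REVIEW_THRESHOLD = 0.90  # assumed cut-off; tune per use case


@dataclass(frozen=True)
class Decision:
    request_id: str
    model_version: str
    prediction: str
    confidence: float
    high_risk: bool
    route: str  # "auto" or "human_review"


audit_log: List[str] = []  # stand-in for durable, append-only storage


def decide(request_id: str, model_version: str, prediction: str,
           confidence: float, high_risk: bool) -> Decision:
    """Send high-risk or low-confidence predictions to a human reviewer."""
    needs_review = high_risk or confidence < REVIEW_THRESHOLD
    decision = Decision(request_id, model_version, prediction, confidence,
                        high_risk, "human_review" if needs_review else "auto")
    audit_log.append(json.dumps(asdict(decision)))  # one JSON line per decision
    return decision


print(decide("req-1", "v1.3.0", "approve", 0.97, high_risk=False).route)
print(decide("req-2", "v1.3.0", "approve", 0.97, high_risk=True).route)
print(decide("req-3", "v1.3.0", "deny", 0.62, high_risk=False).route)
```

Logging the model version next to every decision is what lets you answer an auditor’s question about which model produced a given outcome.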

8) Ethics, fairness, and trust issues

Why it’s a challenge. Biases in training data or flawed objective functions can cause discriminatory outcomes. For startups serving regulated sectors (healthcare, finance, HR), one mistake can cause reputational damage and legal liability.

What startups feel. Fear of negative press, loss of customers, or legal exposure. In practice, building ethically robust systems requires domain expertise, annotation diversity, and rigorous validation.

Tactics.

- Run fairness audits, include diverse annotators, and stress-test models across demographic slices.
- Be transparent with users about model limitations and remediation paths.
- Prioritize use-cases where the error cost is low or easily remediable while refining high-risk models.
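Stress-testing across demographic slices can begin with per-group metrics. The sketch below computes accuracy and positive-prediction rate per group, plus the gap in positive rates (one simple reading of demographic parity). The group names and records are toy data invented for illustration, and a real audit would use more metrics and far larger samples.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (demographic_group, true_label, predicted_label); labels are 0 or 1.
Record = Tuple[str, int, int]


def slice_metrics(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Accuracy and positive-prediction rate for each demographic slice."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(y_true == y_pred)
        c["positive"] += int(y_pred == 1)
    return {
        g: {"accuracy": c["correct"] / c["n"],
            "positive_rate": c["positive"] / c["n"]}
        for g, c in counts.items()
    }


def parity_gap(metrics: Dict[str, Dict[str, float]]) -> float:
    """Largest difference in positive-prediction rate between any two slices."""
    rates = [m["positive_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Toy data, invented for illustration.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]
metrics = slice_metrics(records)
for group, m in sorted(metrics.items()):
    print(group, m)
print("parity gap:", parity_gap(metrics))
```

In this toy data both groups have the same accuracy but very different positive-prediction rates, which is exactly the kind of gap an overall accuracy number hides.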

9) Security, adversarial attacks, and model misuse

Why it’s a challenge. AI systems are susceptible to adversarial inputs, model extraction, and data poisoning. Startups must architect defenses for production systems that can be attacked by sophisticated actors.

What startups feel. A persistent anxiety about safeguarding IP and user data while keeping models accessible.

Tactics.

- Harden APIs with rate limits, anomaly detection, and request authentication.
- Monitor for extraction patterns and throttle unusual usage.
- Use differential privacy, secure enclaves (if needed), and encryption for sensitive datasets.
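Rate limiting is often implemented as a token bucket per API key. The following is a minimal single-process sketch, assuming in-memory state; the rate, capacity, and `check_request` helper are hypothetical, and a multi-server deployment would need shared storage for the buckets.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return whether the request may proceed."""
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


buckets: Dict[str, TokenBucket] = {}


def check_request(api_key: str) -> bool:
    """Look up (or create) the caller's bucket and apply the limit."""
    if api_key not in buckets:
        # Assumed limits: 5 requests per second with bursts of up to 10.
        buckets[api_key] = TokenBucket(rate_per_sec=5, capacity=10)
    return buckets[api_key].allow()


results = [check_request("demo-key") for _ in range(12)]
print(results)
```

Keys that hit the limit repeatedly are a natural signal to feed into extraction monitoring, since systematic high-volume querying is one way attackers try to copy a model.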

10) Competition, commoditization, and the “platform vs vertical” tradeoff

Why it’s a challenge. Horizontal model capabilities are increasingly commoditized through open-source models and big cloud providers’ APIs. Startups must either compete on massive model scale (expensive) or differentiate via vertical specialization, integration, and domain expertise.

What startups feel. Pressure from both well-funded platform companies and low-cost open-source alternatives.

Tactics.

- Focus on domain depth: proprietary datasets, workflows, and compliance that are hard to replicate.
- Build tight integrations with customer stacks (CRM, ERP, EHR) that increase switching costs.
- Consider hybrid strategies: leverage open models for core capabilities and layer proprietary fine-tuning or rules for differentiation.

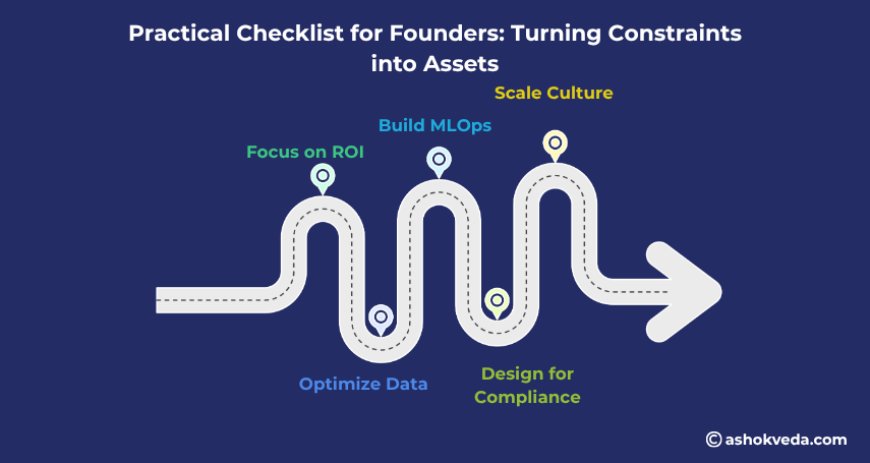

Practical Checklist for Founders: Turning Constraints into Assets

- Focus on measurable ROI use-cases – Start with narrow problems where AI delivers a clear business impact, and define KPIs from day one.
- Optimize data and infrastructure early – Invest in high-quality, governed datasets and cost-efficient compute pipelines to avoid surprises later.
- Build robust MLOps and monitoring – Automate training, deployment, and performance tracking to maintain reliable production models.
- Design for compliance, ethics, and trust – Integrate governance, human oversight, and explainability to reduce risk and improve adoption.
- Hire strategically and scale culture – Prioritize versatile engineering talent, mentorship, and team retention to navigate scarcity and sustain growth.

The challenges facing AI startups are real and structural, but they’re not insurmountable. The projects that succeed combine domain focus, capital efficiency, engineering rigor, and a candid approach to ethics and governance. The companies that win won’t simply have better models; they’ll have better data agreements, repeatable pilots, resilient infra, and distribution channels that turn technical novelty into durable value. If you’re building an AI startup in 2025, treat constraints as design inputs: compute budgets become architecture choices, regulation becomes a trust signal, and scarce talent forces better tooling and mentorship. The path is narrow but navigable, and the winners will shape how entire industries use AI responsibly and profitably.