Security Issues in AI for Startups: Risks and Protection Strategies

Understand the key security risks startups face when adopting AI, including data breaches, model attacks, and compliance challenges, along with practical strategies to stay protected.

AI has become a powerful growth driver for modern startups, helping them automate processes, improve customer experiences, and make smarter decisions. However, as AI adoption accelerates, so do the security risks that come with it. Many early-stage companies are quick to implement AI tools but often overlook the vulnerabilities hidden within data pipelines, machine learning models, and third-party integrations.

These risks can expose sensitive information, disrupt operations, or damage customer trust. For startups working with limited resources, even a small security breach can lead to major financial and reputational losses. Understanding the security issues that come with AI is essential for building safe, reliable, and scalable systems that support long-term business growth.

Why AI Security Matters for Startups

As startups adopt AI across industries, from fintech and e-commerce to healthcare and SaaS, security threats grow in complexity. Startups depend heavily on automation and data-driven tools, often integrating AI models into customer interactions, analysis workflows, and product features. Yet many founders underestimate the risks associated with poorly secured AI systems.

Data leaks, model manipulation, unauthorized access, and biased decision-making can do lasting damage to a startup’s reputation and to the trust customers place in it. As cyberattacks evolve, securing AI models is no longer optional; it is a business necessity. Weak AI security can slow down scaling, push away investors, and expose sensitive customer data.

Understanding Security Issues in AI for Startups

To build safe and reliable AI systems, founders must understand the major security challenges. The core issues are outlined below, along with their implications.

1. Data Vulnerabilities and Unauthorized Access

AI systems are only as secure as the data they use. When training data is stolen, tampered with, or exposed, the entire AI model becomes unreliable.

Common risks include:

- Attackers gaining access to sensitive customer data
- Breaches during data collection, storage, or transfer
- Unauthorized access to training databases
- Missing encryption for datasets

Startups generally rely on cloud storage, which makes their configurations an attractive target for cybercriminals. A single misconfigured storage bucket or unsecured API can open the door to massive data leaks.

2. Model Manipulation and Adversarial Attacks

AI models can be tricked into producing false results when faced with manipulated input. These adversarial attacks are especially dangerous for startups using AI in:

- fraud detection
- identity verification
- recommendation systems
- predictive analytics

Attackers can subtly alter input data to mislead models. For example:

- A fraudster slightly changes transaction patterns to bypass a fraud detection AI
- A hacker modifies images so facial recognition fails
- A malicious competitor feeds misleading patterns to weaken your model’s accuracy

These attacks are hard to detect because models behave normally under most conditions.
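
The first example above can be made concrete with a toy model. The sketch below is only an illustration: the feature names, weights, and threshold are invented, and a real fraud model would be far more complex. It shows how a small, plausible-looking change to one input can move a score just under the decision threshold.

```python
# Toy linear fraud scorer. The weights and threshold are invented for
# illustration and do not come from any real system.
WEIGHTS = {"amount": 0.004, "tx_last_hour": 0.15, "new_device": 0.5}
THRESHOLD = 1.0  # scores at or above this value are flagged as fraud


def fraud_score(tx: dict) -> float:
    """Weighted sum of the transaction features."""
    return sum(WEIGHTS[name] * tx[name] for name in WEIGHTS)


def is_flagged(tx: dict) -> bool:
    """True when the score reaches the fraud threshold."""
    return fraud_score(tx) >= THRESHOLD


original = {"amount": 200.0, "tx_last_hour": 2, "new_device": 0}

# The attacker lowers the amount slightly; every other feature is unchanged.
evasive = {**original, "amount": 170.0}

print(is_flagged(original), is_flagged(evasive))
```

The original transaction is flagged and the slightly altered one is not, even though both look ordinary in isolation. That is why this class of attack tends to go unnoticed without dedicated testing.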

3. Bias and Discrimination in AI Decisions

AI security for startups is not only about preventing attacks; it is also about protecting fairness and trust.

Biased training data can produce unfair outcomes, such as:

- unfairly rejecting qualified job applicants
- favoring certain customers
- inaccurate credit risk predictions
- discriminatory recommendations

For a startup trying to build trust, biased AI can be catastrophic. It can lead to legal issues, compliance failures, and ethical concerns.

4. Insecure Third-Party Integrations

Many startups use pre-trained AI APIs or third-party tools because building everything in-house is expensive. However, this convenience introduces risks:

- Vulnerable third-party code
- Outdated libraries
- Insecure APIs
- Lack of compliance checks

If the external service gets breached, your startup is exposed too.

5. Lack of Security Expertise in Growing Teams

Many startups adopt AI before building the right security culture. Teams often prioritize speed and features over safety.

This leads to:

- weak authentication practices
- poor code reviews
- lack of encryption
- no monitoring for suspicious activity
- insufficient documentation

Without strong security talent, AI systems become easy targets.

6. Model Theft and Intellectual Property Loss

Competitors or malicious actors can attempt to steal:

- your training data
- model architecture
- proprietary algorithms
- learned parameters

This is a major concern for startups where the AI model is the product.

Model theft damages competitive advantage and affects valuation, making it a direct threat to business survival.

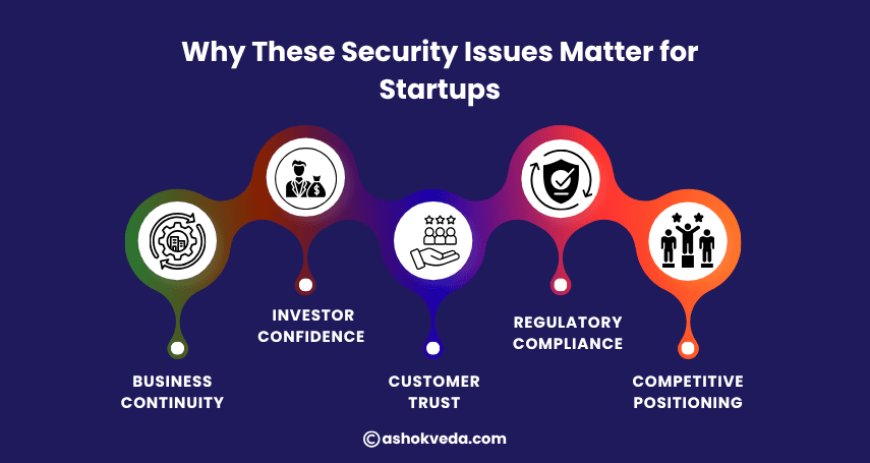

Why These Security Issues Matter for Startups

Startups relying on AI cannot afford failures in security. Every security gap impacts:

Business continuity

A single breach can interrupt operations and damage long-term growth.

Investor confidence

Investors expect a startup’s AI systems to be secure, compliant, and scalable. Security failures erode trust.

Customer trust

Users want to know their data is safe. One public incident can lead to customer churn.

Regulatory compliance

Poor AI security may violate:

- GDPR
- ISO standards
- IT Act of India
- Industry-specific compliance frameworks

Security-focused startups stand out as more reliable and investor-ready.

Common Real-World Scenarios Where Startups Face AI Security Issues

The following realistic scenarios show how serious AI security concerns can be:

- A healthcare startup misconfigures a database behind its AI system, leaking sensitive patient data.
- A fintech startup’s loan approval model is manipulated with adversarial inputs, causing financial loss.
- A SaaS startup relies on an outdated AI API that is compromised, giving attackers unauthorized access.
- A retail startup trains a recommendation model on biased data, producing unfair customer experiences.

These incidents highlight the importance of proactive security design.

How Startups Can Strengthen the Security of AI Models

The following strategies help startups secure their AI systems and reduce risk.

1. Secure Data Collection and Storage

- Use encryption for all datasets
- Implement strong access control
- Store data only on secure cloud platforms
- Conduct regular audits
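
Encryption is usually best left to a vetted library or the cloud provider's managed keys. A complementary check that fits into a regular audit is detecting whether a stored dataset has been tampered with. The sketch below is a minimal example using only Python's standard library. The function names, the sample rows, and the placeholder key are hypothetical, and in practice the key would come from a secrets manager.

```python
import hashlib
import hmac


def sign_dataset(data: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the dataset bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify_dataset(data: bytes, key: bytes, expected_tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_dataset(data, key), expected_tag)


# Hypothetical placeholder; load a real key from a secrets manager instead.
key = b"replace-with-a-key-from-your-secrets-manager"

training_rows = b"user_id,amount,label\n1,20.5,0\n2,950.0,1\n"
tag = sign_dataset(training_rows, key)  # store the tag separately from the data

# Simulate an attacker flipping one label in the stored file.
tampered = training_rows.replace(b"950.0,1", b"950.0,0")

print(verify_dataset(training_rows, key, tag))  # untouched data
print(verify_dataset(tampered, key, tag))       # modified data
```

Storing the tag apart from the dataset matters: an attacker who can rewrite both the data and an unkeyed checksum could otherwise hide the change.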

2. Protect Models Against Adversarial Attacks

- Use adversarial training
- Add input validation layers
- Test models against manipulated data
- Monitor unexpected output behavior
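
As one possible shape for an input validation layer, the sketch below rejects requests whose features are missing, non-numeric, out of range, or unexpected before they reach the model. The feature names and bounds are assumptions made for illustration; real bounds would normally be derived from the training data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureRule:
    """Inclusive numeric bounds for one model input."""
    low: float
    high: float


# Hypothetical features and bounds, chosen only for this example.
RULES = {
    "amount": FeatureRule(0.0, 50_000.0),
    "tx_last_hour": FeatureRule(0, 100),
}


def validate_input(features: dict) -> list[str]:
    """Return a list of problems; an empty list means the input looks acceptable."""
    problems = []
    for name, rule in RULES.items():
        value = features.get(name)
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"{name}: missing or not numeric")
        elif math.isnan(value) or not rule.low <= value <= rule.high:
            problems.append(f"{name}: {value} outside [{rule.low}, {rule.high}]")
    unexpected = set(features) - set(RULES)
    if unexpected:
        problems.append(f"unexpected fields: {sorted(unexpected)}")
    return problems


print(validate_input({"amount": 120.0, "tx_last_hour": 3}))
print(validate_input({"amount": -5, "tx_last_hour": "3"}))
```

Range checks like these will not stop a carefully crafted adversarial input that stays within normal bounds, so they work best alongside adversarial training and output monitoring rather than in place of them.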

3. Ensure Fairness and Reduce Bias

- Use diverse and representative datasets
- Conduct regular bias testing
- Include explainable AI practices
- Involve domain experts in model review
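
A regular bias test can start very simply. The sketch below compares approval rates between groups and reports the gap, a measure often called the demographic parity difference. The group labels and decisions are made-up sample data, and what counts as an acceptable gap is a judgment call that depends on the product and the applicable regulations.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to its share of positive decisions.

    `records` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Demographic parity difference: highest minus lowest selection rate."""
    return max(rates.values()) - min(rates.values())


# Made-up decisions: group A is approved 8 times out of 10, group B 5 out of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = selection_rates(decisions)
gap = parity_gap(rates)
print(rates, round(gap, 2))
```

A single metric like this is a starting point for review with domain experts, not proof that a model is fair or unfair.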

4. Validate Third-Party AI Tools

- Choose vendors with strong security certifications
- Review API documentation
- Update libraries frequently
- Avoid poorly supported open-source models
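
One concrete validation step for third-party models is to pin the checksum of a downloaded artifact and refuse to load anything that does not match. The sketch below assumes the vendor publishes a SHA-256 digest for each release; the function names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str) -> bool:
    """True only when the file's digest matches the pinned value."""
    return hmac.compare_digest(sha256_of_file(path), pinned_sha256.lower())
```

A deployment script could call `verify_artifact` before loading model weights and stop the rollout when it returns `False`. Keeping the pinned digest in version control means a change to the artifact has to go through code review.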

5. Build an AI Security Culture

- Train developers in AI security fundamentals
- Create a secure development lifecycle
- Set strict authentication and password policies
- Encourage regular security testing
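
Part of a strict password policy is never storing passwords in plain text. The sketch below shows salted password hashing with PBKDF2 from Python's standard library. The iteration count is an assumption and should be tuned to current guidance and your latency budget; many teams prefer a dedicated library or a managed identity provider over hand-rolled code.

```python
import hashlib
import hmac
import os

# Assumed default; raise or lower it based on current guidance and hardware.
ITERATIONS = 600_000


def hash_password(password: str, iterations: int = ITERATIONS) -> tuple[bytes, bytes, int]:
    """Return (salt, digest, iterations) for storage; never store the password itself."""
    salt = os.urandom(16)  # a fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest, iterations


def check_password(password: str, salt: bytes, digest: bytes, iterations: int) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, digest)
```

Storing the iteration count next to each hash makes it possible to raise the cost later and upgrade hashes as users log in.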

6. Protect Intellectual Property

- Use model watermarking
- Protect export endpoints
- Limit model access
- Secure code repositories
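
Limiting model access often includes capping how many predictions each API key can request, since copying a model through its API generally requires a large volume of queries. The sketch below is a minimal in-memory sliding-window limiter. The class name and limits are hypothetical, and a production service would more likely enforce this in an API gateway or a shared store.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` per `window_seconds` for each API key."""

    def __init__(self, max_calls: int, window_seconds: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._calls = defaultdict(deque)

    def allow(self, api_key: str) -> bool:
        """Record the call and return True, or return False if the key is over its limit."""
        now = self.clock()
        calls = self._calls[api_key]
        # Drop timestamps that have aged out of the window.
        while calls and now - calls[0] >= self.window_seconds:
            calls.popleft()
        if len(calls) >= self.max_calls:
            return False
        calls.append(now)
        return True


limiter = SlidingWindowLimiter(max_calls=100, window_seconds=60.0)
if limiter.allow("customer-123"):
    pass  # run the model and return the prediction
```

Rate limits slow extraction rather than prevent it, so they pair well with logging unusual query patterns per key.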

AI offers startups significant advantages, but without proper security the risks can outweigh the benefits. Startups must prioritize cybersecurity from day one rather than treat it as an afterthought. By securing data, protecting models, reducing bias, and strengthening team awareness, founders can build trustworthy, resilient, and scalable AI systems. A secure AI foundation not only protects the business but also boosts investor confidence, improves customer trust, and positions the startup for long-term success. In the age of digital innovation, security is not just a feature; it is your competitive edge.