Future of AI for Startups: Trends, Opportunities & Challenges

Explore how AI is reshaping startups in 2025 with emerging trends, challenges, and opportunities driving the future of AI for startups.

Artificial intelligence (AI) is no longer a futuristic concept; it is a central driver of business innovation, operational transformation, and startup disruption. Consider this: the global AI market is estimated to be worth roughly USD 638 billion in 2025 and is projected to grow to around USD 3.68 trillion by 2034. Meanwhile, venture funding is pouring into AI startups: in the first half of 2025, U.S. startup funding surged by ~75.6%, much of it driven by AI-focused companies. Yet despite this boom, many companies struggle to convert AI adoption into measurable value. That leaves the space wide open for startups that can move fast, experiment smartly, and embed AI not just as a feature but as the core of their business. As founders and teams look ahead, the future of AI for startups is being forged now, through decisions about data, architecture, business models, and ethics.

Why 2025 Marks a Defining Moment for AI Startups

Several converging signals make 2025 a watershed year for AI innovation and startup opportunities:

- Accessible infrastructure and models: Foundation models for text, vision, and multimodal tasks are now broadly available or affordable, lowering the barrier for startups to experiment and deliver.
- Enterprise readiness and adoption pressure: Surveys suggest that a very high proportion of companies now use AI in some form, though only a smaller fraction derive full business impact. Spending reflects this pressure: analysts estimate that generative AI spending may exceed USD 644 billion in 2025, a jump of ~75% year over year.
- Massive funding and startup ecosystem momentum: AI startups continue to claim a large share of venture investment; one report suggests AI captured ~46.4% of all U.S. startup funding in 2024.
- The gap between adoption and value: While adoption is high, many companies still struggle to show ROI. Startups that can close the gap between technology and business outcomes will win.
- Regulatory and competitive inflection: Businesses are facing pressure not only to build AI, but to build it responsibly, with privacy, bias mitigation, and governance baked in. Startups that begin with these constraints in mind will gain trust, speed, and differentiation.

The Future of AI for Startups: Key Trends Defining the Next Decade

Here are the major trends that will shape the future of AI for startups from 2025 onward:

1. Verticalized and domain-specific AI solutions

The era of “general-purpose AI” is maturing. While foundation models (large language models, vision models, and so on) are widely accessible, the real value lies in verticalization: tailoring models and systems to specific industries (healthcare, legal, logistics, agriculture) and their workflows. Startups that combine domain expertise, proprietary data, workflow integrations, and vertical compliance will build defensibility that generalists cannot easily match.

2. AI as workflow, not merely a feature

Rather than adding an “AI module,” tomorrow’s startups will embed AI into end-to-end workflows: sensing, decision-making, and action. The best products will reduce time-to-decision, automate key tasks, or influence user behavior. For a startup, the focus should shift from “we added AI” to “our product transforms how users act.”

3. Infrastructure, MLOps, and production maturity

Building models is only the start; scaling them reliably, monitoring drift, and controlling cost and governance are the real obstacles. A growing set of startups will focus on tooling for model orchestration, governance, feature stores, data lineage, inference optimization, and secure data sharing. Founders who invest early in production readiness will avoid a common pitfall: models that work in a pilot but are abandoned before they reach reliable production use.
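Drift monitoring is one of the production tasks above that is easy to start small. The sketch below computes the Population Stability Index (PSI), a common way to compare a feature's live distribution against its training baseline. It is a minimal, dependency-free illustration rather than any particular MLOps product's method; the function name, the equal-width binning, and the small epsilon for empty bins are our own assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare live values (`actual`) against a training baseline (`expected`).

    Bins are equal-width over the baseline's range (an assumption; quantile
    bins are another common choice). Returns 0.0 for identical distributions.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small epsilon avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

In practice a team might run this per feature on a daily window of production inputs and alert when the score crosses a threshold; values around 0.1 (moderate shift) and 0.25 (significant shift) are commonly cited rules of thumb, not formal standards.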

4. Democratization of AI: low-code, no-code, and citizen builders

AI is becoming accessible not just to ML experts but to domain specialists and non-technical users via low-code/no-code platforms. This democratization means new entrants can build faster but also means more competition. Startups that provide domain-specific templates or enable citizen-AI creation will gain traction—but they must balance ease with robustness.

5. Ethics, transparency, and regulatory readiness

As AI becomes pervasive, trust matters. Models must be transparent, auditable, and bias-aware. Regulations—such as the EU AI Act and emerging frameworks globally—will impose requirements around safety, explainability, and data.

Startups that embed these values early will gain enterprise trust and unlock regulated verticals (finance, healthcare, and legal) that many generalists cannot.

6. Business model evolution: outcome-based pricing and human-in-the-loop

Rather than simply charging per API call or per user, AI startups will increasingly adopt outcome-based pricing (you pay for saved hours, improved accuracy, or revenue lift) and human-in-the-loop/hybrid models for high-value tasks.

This alignment between client ROI and startup pricing creates stronger partnerships and higher retention.
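To make outcome-based pricing concrete, here is a minimal sketch of an invoice that bills a share of verified savings, with a floor to cover fixed costs. Every parameter (hourly cost of manual work, revenue share, monthly floor) is a hypothetical contract term, and the hard part in practice, agreeing with the client on how "hours saved" is measured, is assumed away.

```python
from dataclasses import dataclass


@dataclass
class OutcomeContract:
    """Hypothetical outcome-based contract: bill a share of verified savings."""

    hourly_cost: float          # client's loaded cost per hour of manual work (assumed)
    revenue_share: float        # fraction of verified savings the vendor bills (assumed)
    monthly_floor: float = 0.0  # optional minimum fee covering fixed costs (assumed)

    def monthly_invoice(self, hours_saved: float) -> float:
        """Invoice = share of savings, but never below the agreed floor."""
        savings = hours_saved * self.hourly_cost
        return max(savings * self.revenue_share, self.monthly_floor)
```

For example, with a $60 loaded hourly cost, a 20% share, and a $1,000 floor, 500 verified hours saved yields a $6,000 invoice, while a slow month with 50 hours saved falls back to the $1,000 floor. The floor is a design choice that protects the startup's costs when measured outcomes dip.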

7. Edge, federated, and decentralized AI

As compute moves closer to the data source, startups will leverage edge AI, federated learning, and privacy-preserving architectures—especially in domains such as IoT, mobile, industrial sensors, and regulated data.

This means models aren’t just in the cloud—they’re embedded, local, and privacy-aware.
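Federated learning keeps raw data on devices and shares only model updates. The sketch below shows the server-side aggregation step of Federated Averaging (FedAvg), in which each client's parameters are weighted by how many local examples it trained on. It is simplified under stated assumptions: models are flat lists of floats, local training happens elsewhere, and no secure aggregation or differential privacy is applied, although real privacy-preserving deployments typically add both.

```python
from typing import List, Tuple

Weights = List[float]


def federated_average(client_updates: List[Tuple[Weights, int]]) -> Weights:
    """FedAvg-style aggregation of (client_weights, num_local_examples) pairs.

    Only parameter vectors reach the server; raw training data stays on clients.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        # Clients with more local data pull the global model further.
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total
    return global_weights
```

For instance, averaging `[1.0, 2.0]` from a client with 100 examples and `[3.0, 4.0]` from a client with 300 examples gives `[2.5, 3.5]`, pulled toward the larger client.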

Challenges on the Road Ahead

No matter how attractive the opportunities, startups must navigate significant risks and headwinds as the AI market matures.

1. Capital intensity and valuation risk

Training large models and deploying at scale is expensive. While funding is available, it’s increasingly disciplined. Startups must show viable business models and clear paths to revenue.

According to one survey, even “AI supernova” startups that grew to ~$40M ARR in their first year sometimes had gross margins around 25%, suggesting they traded margin for rapid distribution. Misalignment between hype and business reality can lead to valuation corrections.
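To put the margin figure in dollar terms, the arithmetic below treats ARR as a stand-in for annual revenue R (a simplification) and solves the gross-margin definition for cost of revenue C at the reported ~$40M ARR and ~25% margin:

```latex
\text{gross margin} = \frac{R - C}{R} = 0.25
\;\Rightarrow\;
C = 0.75\,R = 0.75 \times \$40\text{M} = \$30\text{M},
\qquad R - C = \$10\text{M}
```

Roughly three of every four revenue dollars go to the cost of delivering the product, which for AI companies can include substantial model inference and hosting costs.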

2. Data access, quality, and scale

AI thrives on data—but high-quality labeled data remains scarce. Startups may need to invest heavily in data collection, annotation, synthetic generation, or partnerships. Poor data means weak models.

3. Talent shortage and rising cost

AI talent (ML engineers, data scientists, and MLOps specialists) remains in high demand. Startups must hire selectively and build leverage: invest in tooling, reuse code, and avoid bloated teams until scale demands it.

4. Measuring business impact vs. model metrics

One of the biggest pitfalls is optimizing for model accuracy rather than business outcome. Model performance (e.g., 90% accuracy) doesn’t matter if it doesn’t change user behavior or produce value.

Startups must tie AI metrics (accuracy, precision, latency) to business KPIs (conversion lift, cost savings, retention).
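A small worked example shows why accuracy alone can mislead. The sketch below compares two hypothetical claims-review models on the same 1,000 cases (100 of them genuinely problematic) and converts their confusion-matrix counts into dollars. The counts, the $500 saved per correctly flagged claim, and the $20 review cost per false alarm are invented for illustration; the point is the translation from a model metric to a business KPI, not the specific numbers.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # true positives: problem claims correctly flagged
    fp: int  # false positives: clean claims sent to manual review
    fn: int  # false negatives: problem claims missed
    tn: int  # true negatives: clean claims correctly passed

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)


def net_value(c: Confusion, value_per_catch: float, cost_per_false_alarm: float) -> float:
    """Business KPI: savings from caught claims minus review cost of false alarms."""
    return c.tp * value_per_catch - c.fp * cost_per_false_alarm


# Hypothetical results for two models on the same 1,000 claims.
model_a = Confusion(tp=20, fp=5, fn=80, tn=895)
model_b = Confusion(tp=80, fp=100, fn=20, tn=800)
for name, m in [("A", model_a), ("B", model_b)]:
    print(name, f"accuracy={m.accuracy:.1%}", f"value=${net_value(m, 500, 20):,.0f}")
```

Model A looks better on accuracy (91.5% vs 88.0%) because it rarely flags anything, yet model B produces roughly four times the net value ($38,000 vs $9,900) because it catches far more of the claims that matter.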

5. Ethical, privacy, and regulatory risk

AI systems can produce biased, unfair, or opaque results. Compliance requirements are expanding. If a startup cannot guarantee transparency, interpretability, or fairness, it may face regulatory or reputational backlash.

A recent MIT study (though proprietary) suggests that many generative AI initiatives still struggle to deliver value, even when they are well funded.

6. Competitive saturation and commoditization

As foundation models become commoditized, differentiation becomes harder. Many easy gains have been captured; remaining gains demand domain expertise, defensible data, and workflow integration.

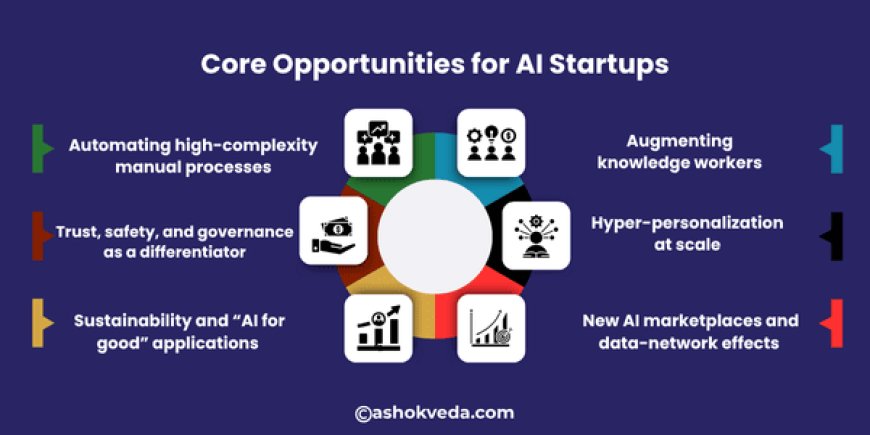

Core Opportunities for AI Startups in 2025 and Beyond

If you’re building a startup, here are some of the most promising opportunity areas for AI:

A. Automating high-complexity manual processes

Many industries (legal, healthcare, insurance, logistics) still rely on costly, error-prone manual processes. Startups can build AI systems that reduce turnaround times, improve accuracy, and reduce human hours.

Example: automating claims processing with document understanding and anomaly detection can yield measurable ROI.

B. Augmenting knowledge workers

Rather than replacing human knowledge workers, AI can dramatically amplify them—analysts, marketers, designers, and engineers. AI copilots, research assistants, and automated ideation engines deliver productivity gains and can become stickier over time.

Example: an AI marketing assistant that drafts campaigns, optimizes media spend, and learns from marketer edits.

C. Hyper-personalization at scale

Customer expectations are rising. Startups can offer AI-driven personalization (product recommendations, content, and learning paths) at scale by combining real-time data, ML models, and orchestration.

Example: a personalized learning platform that adapts in real time to a learner’s behavior and preferences.

D. Trust, safety, and governance as a differentiator

As enterprises adopt AI, concerns around bias, compliance, and transparency grow. Startups that offer “AI with governance baked in” (audit logs, interpretability, and model lineage) will win in regulated markets.

Example: an AI platform that provides automatic bias detection, interpretability dashboards, and logging for compliance teams.
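As an illustration of what automatic bias detection might compute, the sketch below measures the demographic parity gap: the largest difference in positive-decision rates between groups. It is only one of several fairness metrics (others, such as equalized odds, also condition on true outcomes), and the function name, the input format, and any alert threshold a team chooses are assumptions for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def demographic_parity_gap(
    records: Iterable[Tuple[str, int]]
) -> Tuple[float, Dict[str, float]]:
    """Return (largest gap in positive-decision rate across groups, per-group rates).

    Each record is (group_label, decision) with decision 1 for a positive
    outcome (e.g., approved) and 0 otherwise. Raises ValueError on empty input.
    """
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    if not totals:
        raise ValueError("no records to evaluate")
    rates = {group: positives[group] / totals[group] for group in totals}
    return max(rates.values()) - min(rates.values()), rates
```

With group A approved 8 of 10 times and group B 5 of 10 times, the gap is 0.3; a compliance dashboard might flag any gap above a threshold the team has documented and justified.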

E. Sustainability and “AI for good” applications

AI can help optimize energy grids, improve supply-chain efficiency, reduce waste, monitor climate, and more. Investors are increasingly funding “tech for good” startups.

Example: an AI platform that predicts energy consumption, optimizes industrial processes, and reduces carbon footprint.

F. New AI marketplaces and data-network effects

As startups capture vertical data and user interactions, they can build network effects—data improves the model, the model improves the product, and the product attracts more users. Data-driven moats are powerful in AI.

Example: a niche industry platform collecting usage data to refine models and create a defensible position.

AI and Regulation: Building for a Responsible Future

The future of AI for startups is not just technological—it’s also fundamentally ethical and regulatory. Building responsibly is no longer optional—it’s a competitive advantage.

Why responsibility matters

- Enterprise trust: Large customers increasingly seek audit trails, fairness assurances, data lineage, and governance.
- Regulatory compliance: Emerging regulations (e.g., EU AI Act) focus on high-risk AI systems, transparency, bias mitigation, and data protection. Startups in verticals like finance or healthcare may require certifications or auditability.
- Reputation & risk mitigation: Biased models, hallucinations, and poor user outcomes lead to legal and reputational risk. Startups must adopt safe deployment practices.

Building the right foundation

Startups should consider:

- Privacy by design: Collect minimal personal data, anonymize, and facilitate opt-in/opt-out.
- Model cards & documentation: Provide transparency on what the model does, what it doesn’t, training data, and evaluation metrics.
- Monitoring & auditability: Deploy systems that track inputs/outputs, drift, bias, and log user feedback.
- Human-in-the-loop/escalation: For critical decisions (health, legal), ensure a human review layer.
- Ethics governance board: Even a small startup should designate specific people responsible for monitoring AI impacts, fairness, and risk.
- Regulatory road-mapping: If targeting regulated sectors, factor in certification, auditing, third-party review, and compliance documentation from the product design stage.
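Two of the practices above, monitoring and auditability plus human-in-the-loop escalation, can be combined in a single routing step. The sketch below sends low-confidence or critical predictions to human review and records every decision as a JSON line. The 0.9 confidence threshold, the `critical` flag, and the in-memory `audit_log` list are illustrative assumptions; a production system would write to durable, append-only storage and calibrate the threshold against real error rates.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class RoutedDecision:
    request_id: str
    prediction: str
    confidence: float
    model_version: str
    routed_to: str  # "auto" or "human_review"
    timestamp: float


def route_prediction(
    request_id: str,
    prediction: str,
    confidence: float,
    *,
    model_version: str,
    critical: bool,
    audit_log: List[str],
    threshold: float = 0.9,  # hypothetical cut-off; calibrate on real data
) -> RoutedDecision:
    """Escalate critical or low-confidence cases and record every decision."""
    needs_human = critical or confidence < threshold
    decision = RoutedDecision(
        request_id=request_id,
        prediction=prediction,
        confidence=confidence,
        model_version=model_version,
        routed_to="human_review" if needs_human else "auto",
        timestamp=time.time(),
    )
    # One JSON line per decision keeps the trail easy to query and export.
    audit_log.append(json.dumps(asdict(decision)))
    return decision
```

Logging the model version alongside each decision is a deliberate choice: when an auditor or customer asks why a case was handled a certain way, the team can trace it to the exact model that made the call.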

Startups that embed these practices early can turn regulatory readiness into customer trust, faster sales, and fewer unforeseen risks.

The Next Decade: What the Future Holds

Looking forward to 2030 and beyond, what might the future of AI for startups look like?

AI-native startups everywhere

By 2030, nearly every startup will embed AI — not as an “add-on” but as intrinsic to product design, operations, and user experience. Startups in non-tech verticals (construction, agriculture, education, and manufacturing) will increasingly become “AI startups.”

Edge and decentralized intelligence

AI will move beyond the cloud: edge inference, local models on devices, federated learning, and privacy-preserving architectures will proliferate. Startups that build for offline, local, low-latency, and privacy-sensitive contexts will have an advantage.

Agentic and autonomous systems

AI agents that can reason, act, and adapt without constant human direction will become mainstream. Startups building autonomous workflows (cyber defense, logistics orchestration, and financial trading) will scale rapidly.

Data-network effects and ecosystems

As more enterprises adopt AI, large platforms will host ecosystems of AI-driven modules. Startups will increasingly plug into those ecosystems or build data-network effect moats of their own.

The future will favor those who build platforms, not just features.

Regulation and global-AI architecture

The global regulatory landscape will mature: AI certification, cross-border data governance, and model marketplace oversight. Startups will need to comply early or risk being blocked from enterprise deals.

Regions such as Europe and India will push localized AI sovereignty, data residency, and trust frameworks—creating opportunities for startups that localize models to specific markets.

Sustainability, impact, and inclusive AI

AI will be central to tackling climate change, energy optimization, and inclusion (education, health access). Startups that combine commercial value with societal impact will attract both customers and investors.

AI for good will no longer be a “nice to have”; it will be a core dimension of business value and differentiation.

The future of AI for startups is rich with promise—and complex. In 2025, the winds are favorable: infrastructure is accessible, funding is abundant, and demand is rising—but execution still separates winners from the field. Startups that build verticalized, workflow-embedded AI solutions; that tie technology to measurable business outcomes; and that invest early in infrastructure, governance, and go-to-market will define the next wave of growth. At the same time, those who ignore the ethics, data, regulation, or business model dimensions risk being commoditized or displaced. For founders, the game is clear: start with the problem, obsess over the user workflow, design for measurable impact, build infrastructure and data moats, price for outcomes, and embed responsibility into your culture from day one.