Data Requirements for AI Consulting: Key Insights & Best Practices

Explore the data requirements for AI consulting. Learn about data types, quality, governance, and best practices for AI success in 2025 and beyond.

In the swiftly developing field of artificial intelligence, data has emerged as the backbone of AI-driven solutions. According to recent statistics, enterprises investing in AI projects are expected to manage over 175 zettabytes of data globally, highlighting the growing importance of structured, high-quality data in achieving tangible outcomes. For businesses aiming to leverage AI effectively, understanding the data requirements for AI consulting is no longer optional—it is a strategic necessity.

Organizations engaging AI consultants are often surprised to learn that the success of AI projects hinges not just on algorithms or tools, but on the availability, quality, and structure of data. Poor data can lead to inaccurate models, biased predictions, and ultimately, wasted resources. In this blog, we’ll explore in depth the types of data, collection strategies, quality standards, and governance practices essential for successful AI consulting.

Understanding Data Requirements in AI Consulting

AI consulting is more than just implementing machine learning models. It involves a strategic approach to collecting, curating, and preparing data so that AI systems can generate meaningful insights. Consultants work closely with businesses to understand their goals and then define the data requirements for AI consulting based on these objectives.

Key Elements of Data Requirements

-

Volume: How much data is needed to train AI models effectively?

-

Variety: What types of data—structured, semi-structured, or unstructured—are required?

-

Velocity: How frequently does the data need to be updated or streamed for real-time analysis?

-

Veracity: How reliable, accurate, and unbiased is the data?

Consultants often use the “4 Vs of Data” framework (Volume, Variety, Velocity, Veracity) to define the requirements for AI solutions. Let’s explore each of these in detail.

1. Volume of Data

AI models, especially deep learning networks, require vast amounts of data to achieve high accuracy. For instance, natural language processing (NLP) models for customer service chatbots often rely on millions of conversational datasets. Similarly, predictive analytics models for supply chain optimization need years of historical operational data.

Considerations for Volume:

-

The size of historical data necessary for model training.

-

The balance between data quantity and model performance; sometimes, more data doesn’t equate to better outcomes if quality is poor.

-

Storage and computational capabilities are required to process large datasets.

2. Variety of Data

AI solutions thrive on diversity. Data can be:

-

Structured: Tabular data in databases, spreadsheets, or ERP systems.

-

Semi-structured: JSON, XML, or log files.

-

Unstructured: Images, audio, video, emails, social media posts, sensor data.

Understanding the variety of data ensures that AI models can handle real-world complexities. For example, a healthcare predictive model may need structured patient records, semi-structured lab results, and unstructured medical imaging data.

3. Velocity of Data

Many AI applications, especially in finance, e-commerce, or real-time analytics, require continuous data updates. High-velocity data streams enable AI models to deliver insights in near real-time.

Considerations for Velocity:

-

Real-time data ingestion pipelines for continuous learning.

-

Handling batch vs. streaming data based on business needs.

-

Ensuring low-latency processing for time-sensitive AI applications.

Data quality is critical. AI systems trained on inaccurate, incomplete, or biased data produce unreliable predictions. Ensuring veracity involves:

-

Data cleaning to remove inconsistencies, duplicates, and errors.

-

Standardization and normalization of datasets.

-

Bias detection and mitigation to promote fairness and ethical AI use.

According to a 2025 survey, 78% of AI failures are linked to poor data quality, making veracity a top priority in AI consulting engagements.

Data Sources for AI Consulting

AI consultants work with multiple sources of data. Identifying the right sources is a crucial step in defining data requirements. Key data sources include:

-

Internal Business Data: ERP, CRM, sales, inventory, and operational systems.

-

Public Datasets: Open-source datasets like Kaggle, UCI Machine Learning Repository, and government databases.

-

Third-Party Data Providers: Market research reports, industry analytics, social media feeds.

-

IoT and Sensor Data: Data from connected devices in manufacturing, healthcare, or smart cities.

Data Preprocessing and Preparation

Once data sources are identified, preprocessing ensures that the data is ready for AI model development. Preprocessing tasks include:

-

Data Cleaning: Removing noise, missing values, and irrelevant features.

-

Data Transformation: Normalizing, scaling, and encoding categorical variables.

-

Feature Engineering: Creating new variables that improve model performance.

-

Data Splitting: Dividing datasets into training, validation, and test sets.

Consultants emphasize preprocessing because 80% of AI project time is spent on data preparation, making it a cornerstone of successful AI consulting.

Regulatory and Compliance Considerations

AI consultants must also ensure that the data meets legal and regulatory standards, including

-

GDPR compliance for handling personal data.

-

HIPAA regulations for healthcare-related data.

-

Industry-specific guidelines for finance, education, and government sectors.

Non-compliance can lead to severe penalties and reputational damage, underscoring the importance of data governance.

Data Governance and Security

Secure and well-governed data ensures ethical, compliant, and reliable AI solutions. Consultants recommend:

-

Implementing access controls and encryption.

-

Establishing audit trails for data usage.

-

Regularly monitoring and validating datasets.

-

Defining data retention policies and backups.

Governance not only protects sensitive data but also builds stakeholder confidence in AI initiatives.

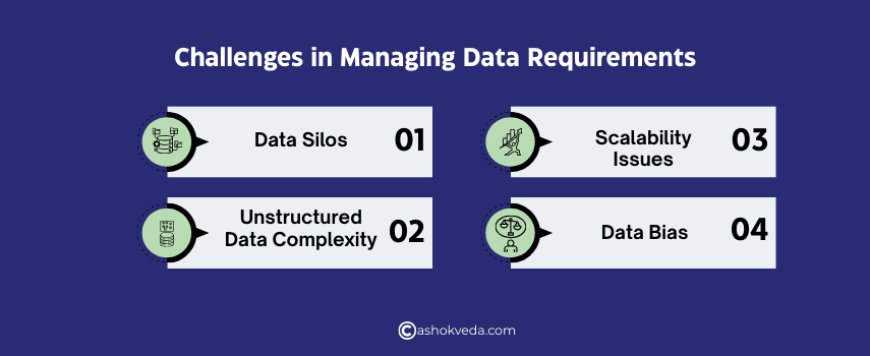

Challenges in Managing Data Requirements

AI consulting teams often face the following challenges:

-

Data Silos: Different departments store data independently, making integration difficult.

-

Unstructured Data Complexity: Extracting insights from unstructured data like videos or social media posts is resource-intensive.

-

Scalability Issues: Handling ever-growing data volumes without compromising processing speed.

-

Data Bias: Ensuring diverse and representative datasets to avoid biased AI models.

Overcoming these challenges requires careful planning, advanced tools, and skilled personnel.

Best Practices for Data Requirements in AI Consulting

To ensure AI projects succeed, consultants follow best practices for data requirements:

-

Start with Business Goals: Align data collection with business objectives.

-

Prioritize Data Quality over Quantity: Accurate, clean data leads to better models.

-

Ensure Data Diversity: Collect data representing all possible scenarios to reduce bias.

-

Establish Governance Frameworks: Define roles, responsibilities, and access controls.

-

Monitor and Update Data Continuously: Keep models relevant by feeding them updated datasets.

-

Invest in Scalable Infrastructure: Ensure storage and processing capabilities match data growth.

Following these practices improves model performance, reduces risks, and enhances ROI.

Tools and Technologies for Data Management

AI consulting relies on various tools to handle data requirements efficiently:

-

Data Warehousing: Snowflake, BigQuery, and Redshift for structured storage.

-

Data Lakes: Hadoop, Amazon S3, and Azure Data Lake for unstructured and semi-structured data.

-

ETL Tools: Talend, Apache NiFi, Informatica for extracting, transforming, and loading data.

-

Data Annotation Tools: Labelbox, Supervisely, and Scale AI for preparing training datasets for machine learning.

These technologies ensure that consultants can access, process, and prepare data efficiently for AI model development.

The Future of Data Requirements in AI Consulting

As AI adoption grows, the data requirements for AI consulting will evolve. Experts predict:

-

Increased reliance on real-time streaming data for predictive and prescriptive analytics.

-

Greater emphasis on ethical AI and bias mitigation requires more diverse datasets.

-

Integration of synthetic data to supplement real-world datasets.

-

Use of AI-powered data cleaning and preprocessing tools to accelerate project timelines.

Organizations that proactively address these evolving requirements will gain a competitive edge in AI adoption.

The backbone of every successful AI project is robust, high-quality data. Understanding the data requirements for AI consulting ensures that AI solutions are accurate, ethical, and scalable. By focusing on volume, variety, velocity, and veracity, businesses can prepare datasets that power AI systems effectively. Combined with proper governance, security, and preprocessing practices, these strategies allow organizations to extract maximum value from AI initiatives. As AI technology continues to advance, the importance of meeting evolving data requirements will only increase. Businesses partnering with skilled AI consultants who understand these data intricacies are better positioned to thrive in the AI-driven era. Properly prepared data isn’t just a requirement—it’s the foundation of AI success.